Migrations.

That word alone is enough to strike fear, or at least a mild headache, in any developer who has had to do a lot of migrations. While it's probably never super fun to move website data from one place to another and get everything to work and look nicely, with MODX CMS you at least have a ton of options for how to do it.

I'd previously posted on the modx blog about "best practices" for moving an entire site. There's also a video here on how to do it with the Teleport toolset.

To take it a step further though, the MODX platform and PHP together provide all the tools you need to perform custom migration tasks, including selectively migrating content or data, rather than an entire site. This post is about some of those tools.

NOTE: Many, if not all of the methods described here, have the potential to completely wreck a website. It goes without saying that before attempting any migration, a full backup should be taken of both the source and target site.

JSON Export

JSON is an easy-to-use format for dealing with data, as well as configuration options. This flexibility makes it a great candidate for tool-building. In this example, we'll use a config file to make "instructions" for MODX to serialize specific objects as JSON, and we'll even include some fine-grained "instructions" to control the behaviour of the import script.

Here's a sample JSON config. It'll make more sense once you take a look at the sample JSON export script.

The comments should give you a pretty good idea of what's going on, but pay special atttention to line 28 where the behaviour controlled by the "toWhere" property is described.

The export script essentially does this:

- Instantiates MODX

- Sets up some options like allowed object classes and filenames, etc

- Uses the array of objects in the

whereproperty as criteria, with which to fetch an object. If it finds one, it turns it into an array and then serializes it as JSON. - Saves the objects to a JSON export file, along with

whereconditions for each object in the target site

Let's look more closely at one of the objects in the JSON config:

{

"class": "modResource",

"where": [

{ "pagetitle:=": "Resource Pagetitle" }

,{ "deleted:=": 0 }

],

"toWhere": [

{

"id:=": 10

}

]

}

This queries for a Resource based on pagetitle and deleted state, since you might have duplicate page titles and you know you want the non-deleted one. The toWhere criteria is different though, specifying an ID. So you want this draft from your staging site to replace the Resource with a specific ID on prod, even though the draft may have an arbitrarily different ID.

This allows you some pretty powerful controls over which objects are fetched and how they are imported. You can create new objects or update existing ones with the same or different criteria. xPDO exposes SQL query language as easy-to-use JSON, making this an extremely powerful and elegant solution, out-of-the-box.

The sample JSON import script consumes the exported JSON, and uses the where property as criteria for fetching objects to update.

Some important gotchas with the example scripts:

- Collisions may happen when attempting to create new objects with the same values in unique fields, like

name, for example. I'm not sure if the save methods on all classess will validate unique fields and fail before saving, but if they don't, and you have duplicate entries with colliding values, it may cause bad errors in your site. - There's nothing in there for Element Property Sets, or Plugin Events, or Resource TVs, etc. These related objects should be collected and exported along with the primary objects, in most real-world situations. Sometimes you only want the primary objects but these cases are relatively rare.

- Your criteria may not result in the script retrieving the object you think it will. If more than one object meets the criteria, the first-matching one, based on arbitrary database internal sorting, will be returned. Double-check the JSON export file to ensure you have the correct data.

- These sample scripts have been minimally tested. I can't reiterate enough how important it is to backup before using scripts like these.

SQL Client

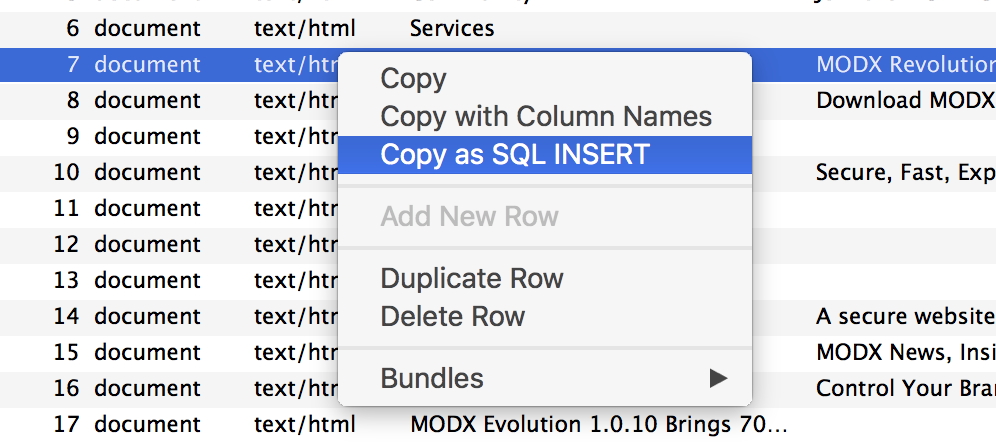

If you've never used Sequel Pro and you're on a Mac, I'd highly recommend it. It's really user-friendly and packs powerful features. One way to migrate selective bits of data is to select the row(s) you want, in the UI, and use the context-menu option "Copy as SQL INSERT".

I usually have the source DB open in one window and the target DB open in another, and paste the SQL statement into the query window:

The one gotcha with this method, is the SQL INSERT statement will have the ID of the database record, and if you're pasting that into another site, you may not want the object to have the same ID. In fact, if a record with the same ID exists, you won't be able to execute that statement—you'll get an error like Duplicate entry '7' for key 'PRIMARY'.

If you want to update a record with the same ID, you'll need to change the statement so it starts with REPLACE INTO instead of INSERT INTO. You can also select multiple records, using shift+click or command+click actions, and the copied SQL statement will contain instructions to insert them all. Be careful when editing a wall of SQL statement text like that—it's prone to errors.

CSV Export

PHP has some built-in tools for converting CSV files to arrays. This can come in handy when you want to migrate data selectively and you want to process the data programmatically as well.

Here's a gist of a primitive export-to-csv script. Again, I tried to write helpful comments, but essentially, it:

- Instantiates MODX

- Queries for Resources to export, based on template and class. You can modify the code to query on other attributes.

- Collects the specified TV values

- Outputs each Resource, and TV values, as a row in the CSV export file.

The companion import script does the reverse, creating Resources in your target site. You have the option to override any of the field values (see the comment at line 43). In this example, the TV values are not re-created in the target site, but rather stored in Resource properties for future reference. This may not be useful for your use case—it's just an example.

While it doesn't provide quite the fine-grained control that the JSON example does, it allows you to easily examine the content of the export file in a spreadsheet client to ensure it's what you want. If you only have the standard fields (no TVs) you could even import the CSV file directly into your SQL client, as most of them support CSV import.

Teleport and Endpoint

For those who have the budget, and require the most robust, reliable, scalable, and easy-to-use solution, Teleport and its related technologies can really deliver. Here are just some of the cool things I've seen developed, as part of the MODX Services Team:

- A component that tracks changes to Resources and related TVs, and lists them in a grid, where the user can select them to be packaged for export on a button-click. The package is sent via SFTP to the target server, where a custom service is running that listens for incoming packages and automatically deploys them.

- A workflow based solely on publicly available MODX Cloud Snapshot templates, where only Elements (not Resources) are sent to a cluster of load-balanced Cloud instances, when a developer needs to deploy changes.

- Synchronizing between a cluster of load balanced dedicated servers and a dev Cloud instance, using Teleport and custom Snapshot templates.

Well there you have it. It should be no surprise that MODX provides many different ways of doing things. Some give you more control, but with greater power comes greater responsibility. Choose your tools wisely, proceed with care, and MODX can help you achieve almost anything imaginable for your website or app.